Modern enterprises are increasingly adopting microservices architecture to improve agility, accelerate release cycles, and support digital transformation. Microservices allow development teams to build and deploy independently, update components quickly, and scale services efficiently. However, the realities of testing microservices at scale often reveal hidden quality risks that organizations do not plan for early enough.

Many teams invest heavily in development speed but not equally in robust testing strategies. As a result, enterprises encounter performance issues, reliability gaps, and customer dissatisfaction as their systems scale.

This blog explores the key quality risks organizations face when testing microservices at scale and outlines best practices to address them.

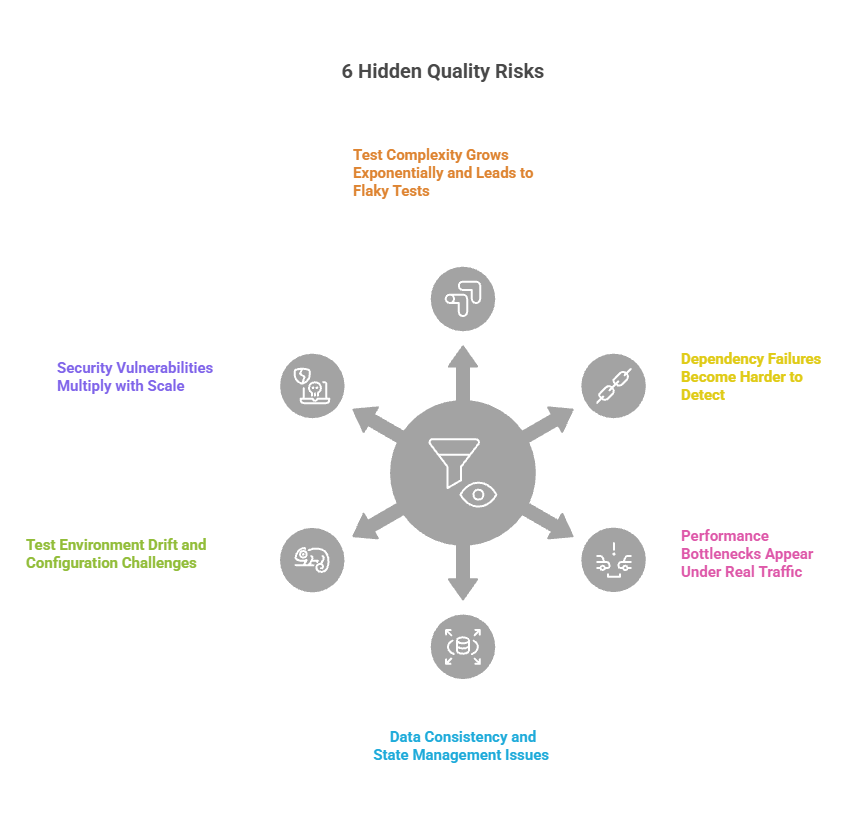

1. Test Complexity Grows Exponentially and Leads to Flaky Tests

Microservices break monolithic applications into dozens or hundreds of small services. Each service has its own API, dependencies, and failure modes. This fragmentation increases the number of test combinations.

The fundamental problem is that a microservices ecosystem has far more interactions and states than a monolith. As complexity grows, test suites also expand and become more fragile. Flaky tests, which produce inconsistent results across runs, are a common symptom of this fragility and often mask real defects.

Teams can no longer rely on traditional end-to-end test suites alone. They need:

- Contract testing between services

- Component and integration testing

- Environment simulation for downstream dependencies

If these practices are missing, defects may hide in service interactions while unreliable test results reduce confidence in overall test coverage.
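One simple way to separate flaky tests from genuinely failing ones is to rerun each test several times and check whether its outcome changes. The sketch below assumes tests can be called as plain functions that return a boolean; `classify_test`, the run count, and both sample tests are hypothetical illustrations, not part of any particular test framework.

```python
import random
from collections import Counter
from typing import Callable


def classify_test(test: Callable[[], bool], runs: int = 20) -> str:
    """Rerun a test and classify it as 'pass', 'fail', or 'flaky'.

    A test whose outcome changes across identical runs is flaky: its result
    depends on something other than the code under test (timing, ordering,
    shared state, or an unstable dependency).
    """
    outcomes = Counter(test() for _ in range(runs))
    if len(outcomes) > 1:
        return "flaky"
    return "pass" if outcomes[True] else "fail"


# Hypothetical tests, for illustration only.
def stable_test() -> bool:
    return sum([1, 2, 3]) == 6


_rng = random.Random(42)  # seeded so the demo is reproducible


def timing_dependent_test() -> bool:
    # Simulates a test that races an asynchronous call and loses about 30% of the time.
    return _rng.random() > 0.3
```

Tests classified as flaky can then be quarantined and fixed separately, so they stop masking real failures in the main suite.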

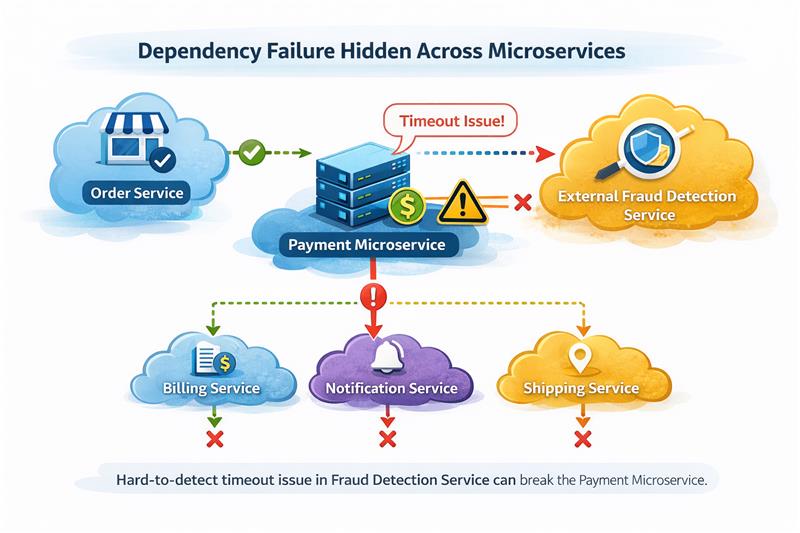

2. Dependency Failures Become Harder to Detect

When services depend on each other, a failure in one service can cascade silently through the system. Standard test suites often simulate only a subset of real interactions, so detecting these failures without targeted testing is like finding a needle in a haystack.

For example, a payment microservice may fail intermittently due to timeout issues from an external fraud detection service. Without proper mock-based testing or service virtualization, this failure might only show up in production.
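A mock-based test can reproduce the intermittent timeout from this example on demand. The sketch below uses Python's standard `unittest.mock`; `process_payment`, the `fraud_client.check` method, the 2-second timeout, and the `PENDING_REVIEW` fallback are hypothetical names and behavior chosen for illustration.

```python
from unittest import mock


def process_payment(amount: float, fraud_client) -> str:
    """Hypothetical payment handler that depends on a fraud detection service."""
    try:
        verdict = fraud_client.check(amount, timeout=2.0)
    except TimeoutError:
        # Degrade safely: hold the payment for review instead of crashing or approving blindly.
        return "PENDING_REVIEW"
    return "APPROVED" if verdict == "ok" else "DECLINED"


def test_payment_survives_intermittent_fraud_timeouts() -> None:
    fraud_client = mock.Mock()
    # First call times out, second succeeds: an intermittent failure, reproduced deterministically.
    fraud_client.check.side_effect = [TimeoutError("fraud service timed out"), "ok"]
    assert process_payment(100.0, fraud_client) == "PENDING_REVIEW"
    assert process_payment(100.0, fraud_client) == "APPROVED"
    assert fraud_client.check.call_count == 2
```

Because `side_effect` is a list, each call consumes the next item, and exception instances in the list are raised, so the test exercises both the failure path and the recovery path in a single run.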

3. Performance Bottlenecks Appear Under Real Traffic

Performance testing is often overlooked in microservices projects. When load testing is done, it frequently evaluates individual services in isolation, which fails to capture how performance behaves across a distributed system.

In real-world environments, microservices interact through chained calls, shared infrastructure, and dynamic scaling mechanisms. Even minor latency in one service can propagate across multiple dependencies, resulting in slow response times, resource contention, and cascading delays that are difficult to predict in controlled test environments.
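Two pieces of simple arithmetic show why small delays grow at the system level: latencies add up along a synchronous call chain, and when a request fans out to many services, the chance that at least one of them is slow rises quickly. The sketch below assumes the downstream calls are independent, which real systems only approximate; the function names are illustrative.

```python
from typing import Sequence


def chained_latency_ms(per_hop_ms: Sequence[float]) -> float:
    """Synchronous call chain: each hop waits for the next, so latencies add up."""
    return sum(per_hop_ms)


def prob_request_is_slow(p_slow: float, fan_out: int) -> float:
    """Probability that a request waiting on `fan_out` independent calls hits
    at least one slow call, when each call is slow with probability p_slow."""
    return 1 - (1 - p_slow) ** fan_out


if __name__ == "__main__":
    print(chained_latency_ms([20.0] * 5))             # five 20 ms hops
    print(round(prob_request_is_slow(0.01, 1), 3))    # one dependency
    print(round(prob_request_is_slow(0.01, 100), 3))  # fan-out to 100 calls
```

Under these assumptions, a dependency that is slow only 1% of the time makes about 1% of requests slow when called once, but roughly 63% of requests slow when a single request waits on 100 such calls.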

4. Data Consistency and State Management Issues

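Microservices often manage their own databases or state. While this improves modularity, it introduces data consistency challenges. When updates propagate between services asynchronously, services can temporarily disagree about the same data, and tests must distinguish this expected lag from genuine inconsistency. Verifying eventual consistency across services therefore complicates test strategies.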

Without careful design of test data management and state verification, systems can produce inconsistent user experiences or data corruption.
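A common pattern for verifying eventual consistency is to poll for the expected state with a bounded timeout instead of asserting immediately after a write. In the sketch below, the order store, the reporting view, and the timer-based propagation are hypothetical stand-ins for two services connected by asynchronous messaging.

```python
import threading
import time
from typing import Callable, Dict


def wait_until_consistent(check: Callable[[], bool], timeout_s: float = 5.0,
                          interval_s: float = 0.05) -> bool:
    """Poll `check` until it returns True or `timeout_s` elapses.

    Asserting immediately after a write would make the test flaky; polling
    with a bounded timeout tolerates expected propagation lag while still
    failing if the services never converge.
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if check():
            return True
        time.sleep(interval_s)
    return check()  # final attempt at the deadline


# Hypothetical services: an order store and a reporting view that is
# updated asynchronously, as if via a message broker.
orders_db: Dict[str, float] = {}
reporting_view: Dict[str, float] = {}


def place_order(order_id: str, total: float, propagation_delay_s: float = 0.2) -> None:
    orders_db[order_id] = total
    # Simulate delayed propagation to the second service's store.
    threading.Timer(propagation_delay_s, reporting_view.__setitem__,
                    args=(order_id, total)).start()
```

The timeout should reflect the propagation delay the system is expected to meet; if convergence takes longer than that, the test fails and surfaces a real consistency problem.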

5. Test Environment Drift and Configuration Challenges

One often-overlooked risk is environment drift. Microservices can behave differently in development, staging, and production because of differences in configuration, network latency, and resource limits.

Many teams run tests in environments that do not mimic production, leading to false confidence and hidden defects. Test environments must replicate production settings as closely as possible.
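Configuration drift can be detected mechanically by diffing each environment's effective configuration against production. The sketch below assumes configurations are available as flat key-value dictionaries; the setting names and values are hypothetical.

```python
from typing import Any, Dict, Tuple


class _Missing:
    """Marker for a key that is absent in one environment."""

    def __repr__(self) -> str:
        return "<missing>"


MISSING = _Missing()


def config_drift(reference: Dict[str, Any],
                 candidate: Dict[str, Any]) -> Dict[str, Tuple[Any, Any]]:
    """Return every key whose value differs between two environments' configs."""
    drift = {}
    for key in sorted(reference.keys() | candidate.keys()):
        ref_val = reference.get(key, MISSING)
        cand_val = candidate.get(key, MISSING)
        if ref_val != cand_val:
            drift[key] = (ref_val, cand_val)
    return drift


# Hypothetical configurations for the same service in two environments.
production = {"http_timeout_ms": 2000, "db_pool_size": 50, "tls_enabled": True}
staging = {"http_timeout_ms": 30000, "db_pool_size": 5, "tls_enabled": True, "debug": True}
```

Here staging would report drift in `http_timeout_ms`, `db_pool_size`, and `debug`. A 30-second timeout in staging, for instance, could hide exactly the dependency timeouts that production's 2-second limit would expose.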

6. Security Vulnerabilities Multiply with Scale

Each microservice exposes one or more API endpoints, and every additional endpoint expands the attack surface. Security testing is therefore not optional. Without robust API security tests, access control issues, injection flaws, or unintended data exposure may reach production undetected.

Automated security testing should be included in CI/CD pipelines. Tools for static and dynamic analysis help catch vulnerabilities before they reach production.
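A basic automated access control check sends an anonymous request to every non-public route and flags any route that does not reject it. The sketch below runs against a hypothetical in-process handler and route table; in a real pipeline the same loop would issue HTTP requests to a deployed test environment. It checks authentication only (401 responses), not finer-grained authorization.

```python
from typing import Callable, List, Optional, Set, Tuple

Route = Tuple[str, str]  # (HTTP method, path)

# Hypothetical route table for a service behind an API gateway.
ROUTES: Set[Route] = {("GET", "/health"), ("GET", "/orders"),
                      ("POST", "/payments"), ("GET", "/users/me")}
PUBLIC_ROUTES: Set[Route] = {("GET", "/health")}
VALID_TOKENS = {"test-token"}


def handle(method: str, path: str, token: Optional[str] = None) -> int:
    """Hypothetical request handler that returns an HTTP status code."""
    if (method, path) not in ROUTES:
        return 404
    if (method, path) in PUBLIC_ROUTES:
        return 200
    return 200 if token in VALID_TOKENS else 401


def find_unprotected_routes(handler: Callable[..., int], routes: Set[Route],
                            public_routes: Set[Route]) -> List[Route]:
    """Return non-public routes that do not reject an anonymous request with 401."""
    return sorted(r for r in routes - public_routes
                  if handler(r[0], r[1], token=None) != 401)
```

Because the check iterates over the route table rather than a hand-picked list, a newly added endpoint that forgets authentication is caught automatically.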

Across these risk areas, teams are increasingly using AI-driven analysis to identify recurring failure patterns and high-risk service interactions that are difficult to detect manually. For the flaky tests described earlier, improving reliability also requires isolating flaky tests, strengthening test stability, and continuously maintaining test suites as systems scale.

6 Best Practices for Testing Microservices at Scale

To address these hidden quality risks, enterprises should adopt a structured approach:

1. Contract Testing

Verify interactions between services automatically to prevent breaking changes as teams deploy independently. Contract testing ensures that service APIs remain compatible even as implementations evolve.

Common tools: Pact, Spring Cloud Contract
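The core idea of consumer-driven contract testing can be shown without any framework: the consumer declares the fields it relies on, and the provider's responses are checked against that declaration. The sketch below is a hand-rolled illustration, not the Pact or Spring Cloud Contract API; `ORDER_CONTRACT` and the response shapes are hypothetical. Tools such as Pact add the missing pieces, such as recording contracts from consumer tests and verifying them against the real provider in CI.

```python
from typing import Any, Dict, List

# Hypothetical consumer-defined contract: the fields a checkout service reads
# from the order service's response, and the type it expects for each.
ORDER_CONTRACT: Dict[str, type] = {"id": str, "status": str, "total_cents": int}


def verify_contract(contract: Dict[str, type], response: Dict[str, Any]) -> List[str]:
    """Return a list of contract violations (empty means compatible).

    Extra fields in the response are ignored, so providers can add data
    without breaking consumers; removing or retyping a used field is flagged.
    """
    errors = []
    for field, expected in contract.items():
        if field not in response:
            errors.append(f"missing field: {field}")
        elif not isinstance(response[field], expected):
            errors.append(f"{field}: expected {expected.__name__}, "
                          f"got {type(response[field]).__name__}")
    return errors
```

For example, if the provider renames `total_cents` to `total`, verification reports `missing field: total_cents` before the change is deployed, rather than after the checkout service breaks.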

2. Service Virtualization

Simulate unavailable, slow, or unstable dependencies to validate system behavior under real-world conditions. Service virtualization helps teams uncover hidden dependency failures before they reach production.

Common tools: WireMock, Parasoft Virtualize
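At its simplest, service virtualization means standing up a stand-in for a dependency whose responses and latency the test controls. The sketch below uses only Python's standard library (`http.server`, `urllib.request`) rather than WireMock or Parasoft Virtualize; `start_virtual_service`, `fetch_fraud_score`, and the `/score` endpoint are hypothetical.

```python
import json
import threading
import time
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Any, Dict, Optional


def start_virtual_service(body: Dict[str, Any], delay_s: float = 0.0,
                          status: int = 200) -> ThreadingHTTPServer:
    """Start a stub HTTP dependency on a free localhost port.

    Every GET returns `body` as JSON after `delay_s` seconds, so tests can
    reproduce a slow or misbehaving downstream service on demand.
    """
    class StubHandler(BaseHTTPRequestHandler):
        def do_GET(self) -> None:
            time.sleep(delay_s)  # simulated network or processing latency
            payload = json.dumps(body).encode("utf-8")
            try:
                self.send_response(status)
                self.send_header("Content-Type", "application/json")
                self.send_header("Content-Length", str(len(payload)))
                self.end_headers()
                self.wfile.write(payload)
            except OSError:
                pass  # the client gave up (e.g. timed out) before we answered

        def log_message(self, format: str, *args: Any) -> None:
            pass  # keep test output quiet

    server = ThreadingHTTPServer(("127.0.0.1", 0), StubHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def base_url(server: ThreadingHTTPServer) -> str:
    host, port = server.server_address[:2]
    return f"http://{host}:{port}"


def fetch_fraud_score(url: str, timeout_s: float) -> Optional[float]:
    """Hypothetical client: returns None if the dependency is unreachable or
    slower than timeout_s, so the caller can apply a fallback."""
    # An empty ProxyHandler bypasses proxy environment variables for local calls.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(f"{url}/score", timeout=timeout_s) as resp:
            return float(json.load(resp)["score"])
    except OSError:  # covers URLError and socket timeouts
        return None
```

Starting one stub with no delay and another with a delay longer than the client's timeout lets the same test suite cover both the normal path and the slow-dependency path, without relying on the real service misbehaving.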

3. Performance Testing Under Load

Emulate concurrent users, distributed traffic, and peak load conditions across interconnected services. This approach reveals latency amplification and resource contention that isolated testing often misses.

Common tools: Apache JMeter, k6
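Whatever tool is used, the key measurement is end-to-end latency percentiles under concurrency, not per-service averages. The stdlib sketch below is not a substitute for JMeter or k6. It drives a simulated three-hop request chain with concurrent workers and reports p50, p95, and maximum latency. The hop timings (usually about 2 ms, occasionally 50 ms) are hypothetical.

```python
import random
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Dict

_rng = random.Random(7)  # seeded for a repeatable mix of fast and slow hops


def simulated_hop() -> None:
    # Hypothetical downstream service: usually ~2 ms, occasionally 50 ms.
    time.sleep(0.05 if _rng.random() < 0.02 else 0.002)


def handle_request(hops: int = 3) -> float:
    """Run one request through a chain of hops; return its latency in ms."""
    start = time.perf_counter()
    for _ in range(hops):
        simulated_hop()
    return (time.perf_counter() - start) * 1000


def run_load(requests: int = 200, concurrency: int = 20, hops: int = 3) -> Dict[str, float]:
    """Issue `requests` calls with `concurrency` workers and summarize latency."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda _: handle_request(hops), range(requests)))
    cuts = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {"p50_ms": cuts[49], "p95_ms": cuts[94], "max_ms": max(latencies)}
```

In a run like this the median stays close to the sum of the fast hops, while the tail is dominated by the occasional slow hop. That is the amplification effect that isolated, average-based measurements tend to hide.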

4. End-to-End and Chaos Testing

Validate business workflows and system resilience by testing across service boundaries and injecting controlled failures. These tests help ensure systems degrade gracefully under stress.

Common tools: Testcontainers, Gremlin
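Chaos testing boils down to injecting faults deliberately and asserting that the system degrades gracefully. The sketch below wraps a dependency call so that it fails at a configurable rate, then checks that a hypothetical recommendations feature falls back to a static list instead of erroring. The names, the `ConnectionError` fault type, and the fallback are illustrative assumptions. Tools such as Gremlin inject faults at the infrastructure level rather than in code.

```python
import random
from typing import Callable, List, TypeVar

T = TypeVar("T")


def inject_faults(fn: Callable[[], T], failure_rate: float,
                  rng: random.Random) -> Callable[[], T]:
    """Wrap a dependency call so that it fails with probability `failure_rate`."""
    def wrapped() -> T:
        if rng.random() < failure_rate:
            raise ConnectionError("injected fault")
        return fn()
    return wrapped


def get_recommendations(fetch: Callable[[], List[str]]) -> List[str]:
    """Hypothetical feature: fall back to a static list if the dependency fails."""
    try:
        return fetch()
    except ConnectionError:
        return ["bestsellers"]
```

Seeding the random generator keeps each chaos run reproducible, so a failure found under injected faults can be replayed while it is being fixed.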

5. Environment Parity

Automate infrastructure to closely match production environments across development and testing stages. Maintaining environment parity reduces configuration drift and prevents false confidence from passing tests.

Common tools: Docker, Kubernetes, Terraform

6. Continuous Security Scanning

Integrate API, dependency, and vulnerability scans into CI/CD pipelines to secure an expanding microservices attack surface. Continuous scanning helps detect risks early without slowing release cycles.

Common tools: OWASP ZAP, Snyk, Trivy
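To keep continuous scanning from slowing releases unnecessarily, pipelines typically fail the build only on findings above a chosen severity. The sketch below assumes a simplified, hypothetical JSON report of the form `{"findings": [{"id": ..., "severity": ...}]}`. Real scanners each use their own output format, so a small adapter would be needed. Unrecognized severities are treated as blocking so that the gate fails safe.

```python
import json
import sys
from typing import Any, Dict, List

SEVERITY_ORDER = ["LOW", "MEDIUM", "HIGH", "CRITICAL"]


def _rank(severity: str) -> int:
    # Unrecognized severities rank highest so they block the build (fail safe).
    sev = severity.upper()
    return SEVERITY_ORDER.index(sev) if sev in SEVERITY_ORDER else len(SEVERITY_ORDER)


def blocking_findings(report: Dict[str, Any], threshold: str = "HIGH") -> List[str]:
    """Return IDs of findings at or above `threshold` severity."""
    min_rank = _rank(threshold)
    return [f["id"] for f in report.get("findings", []) if _rank(f["severity"]) >= min_rank]


def main(report_path: str) -> int:
    with open(report_path, encoding="utf-8") as fh:
        blocked = blocking_findings(json.load(fh))
    for finding_id in blocked:
        print(f"blocking finding: {finding_id}")
    return 1 if blocked else 0  # a non-zero exit code fails the CI job


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

Lower-severity findings still appear in the scanner's own report for triage. Only the gate decision is limited to the threshold.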

Conclusion

As enterprises scale microservices, quality risks increasingly arise from service interactions and runtime behavior rather than individual components. Addressing these risks requires moving beyond isolated testing to system-wide quality engineering that reflects real-world usage and failure conditions.

At Impelsys, microservices testing is approached as a continuous, intelligence-driven discipline. Our teams apply proven practices such as contract testing, service virtualization, performance engineering, and environment parity, supported by industry-standard testing and automation tools, to uncover hidden risks early and improve system resilience before release.

As systems grow more complex, AI plays a critical role in strengthening testing strategies. By leveraging AI-driven insights to identify high-risk service interactions, detect anomalous performance behavior, and reduce test flakiness, Impelsys enables teams to test smarter, scale faster, and release with confidence.

Authored by – Rinky Lahoty and Rahi Sarkar